
Optimal Features for Large-scale Visual Recognition


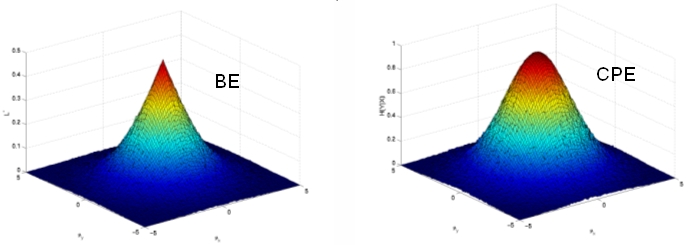

The problem of visual recognition is naturally formulated as one of statistical classification: given an image, the recognizer must assign it to one of a set of previously defined visual classes, ideally with the smallest possible probability of error. Under this formulation, a recognition architecture has two fundamental components: 1) the feature space (the space of measurements in which classification takes place), and 2) the classifier architecture (e.g. a neural network, support vector machine, or “plug-in” classifier). While the last decades have provided significant insight into the design of optimal architectures, the design of optimal feature spaces (in the minimum probability of error sense) remains largely an art. This is problematic, since no architecture will perform well if the feature set is poor.

Mathematically, the choice of features determines the so-called “Bayes error”, the lowest probability of error achievable by any architecture on the corresponding feature space. Minimizing the Bayes error is, therefore, a natural optimization criterion for feature design. Unfortunately, it is usually difficult to work with, and many alternative criteria have been proposed in the literature. These criteria have significant limitations: some apply only to certain types of problems (e.g. they assume Gaussian classes), some lead to optimization procedures that are too costly, and others are unrelated to the goal of minimizing the probability of error (e.g. principal or independent component decompositions). One alternative criterion, advocated in the cognitive science literature and widely used in communications problems, is based on Shannon’s definition of information: the maximization of the mutual information between features and class labels. This project studies three interesting properties of this criterion.
The first is the existence of very close connections between this criterion and the minimization of the Bayes error. The second is that it establishes a natural formalism for the theoretical study of the two properties that most impact the quality of a feature set: discriminant power vs. feature independence. The third is that this formalism, in turn, makes it possible to understand how knowledge of the statistics of natural images can be exploited to derive optimal feature design algorithms of reduced complexity. The project has produced various theoretical insights as well as efficient algorithms for information-theoretic feature selection that scale to problems with large numbers of classes.
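To make these quantities concrete, the following is a minimal sketch, assuming discrete (integer-coded) features and NumPy. It computes the Bayes error of a feature (one minus the sum, over feature values, of the largest joint probability) and the mutual information I(X;Y), then uses them in a greedy selection loop that trades discriminant power, I(X_j;Y), against redundancy with already-selected features, I(X_j;X_s). That scoring rule is the minimum-redundancy maximum-relevance (mRMR) heuristic, swapped in here purely for illustration; it is not the project's algorithm. All function names and the toy data are hypothetical. The final assertion checks the Hellman-Raviv bound, under which the Bayes error never exceeds half of H(Y|X) in bits; because I(X;Y) = H(Y) - H(Y|X) and H(Y) is fixed by the problem, raising the mutual information tightens that bound.

```python
import numpy as np

# Hypothetical helpers for discrete features; none of these names come from the project.


def entropy(p):
    """Shannon entropy (bits) of a probability table; zero cells are ignored."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())


def mutual_information(joint):
    """I(X;Y) = H(X) + H(Y) - H(X,Y) for a joint table joint[x, y]."""
    joint = np.asarray(joint, dtype=float)
    return entropy(joint.sum(axis=1)) + entropy(joint.sum(axis=0)) - entropy(joint)


def conditional_entropy(joint):
    """H(Y|X) = H(X,Y) - H(X) for a joint table joint[x, y]."""
    joint = np.asarray(joint, dtype=float)
    return entropy(joint) - entropy(joint.sum(axis=1))


def bayes_error(joint):
    """Lowest achievable error given feature X: 1 - sum_x max_y P(x, y)."""
    return float(1.0 - np.asarray(joint, dtype=float).max(axis=1).sum())


def empirical_joint(a, b):
    """Normalized co-occurrence table of two non-negative integer sample vectors."""
    table = np.zeros((a.max() + 1, b.max() + 1))
    np.add.at(table, (a, b), 1.0)  # count each (a_i, b_i) pair
    return table / table.sum()


def greedy_select(X, y, k):
    """mRMR-style forward selection (an assumption, not the project's method):
    score = I(X_j;Y) - mean over selected s of I(X_j;X_s)."""
    relevance = [mutual_information(empirical_joint(X[:, j], y)) for j in range(X.shape[1])]
    selected = []
    while len(selected) < k:
        best, best_score = None, -np.inf
        for j in range(X.shape[1]):
            if j in selected:
                continue
            redundancy = np.mean([mutual_information(empirical_joint(X[:, j], X[:, s]))
                                  for s in selected]) if selected else 0.0
            score = relevance[j] - redundancy
            if score > best_score:
                best, best_score = j, score
        selected.append(best)
    return selected


# Toy problem with 4 classes: feature 1 duplicates feature 0 (the high bit of the label),
# feature 2 is a noisy copy of the low bit of the label.
y = np.array([0, 1, 2, 3, 0, 1, 2, 3])
X = np.stack([y // 2, y // 2, np.array([0, 1, 0, 1, 0, 1, 0, 0])], axis=1)

selected = greedy_select(X, y, k=2)
pair = 2 * X[:, selected[0]] + X[:, selected[1]]  # encode the selected pair as one discrete feature
joint = empirical_joint(pair, y)
print(selected, bayes_error(joint), mutual_information(joint))

# Hellman-Raviv bound: Bayes error <= H(Y|X) / 2 (entropy in bits).
assert bayes_error(joint) <= 0.5 * conditional_entropy(joint)
```

On this toy data, ranking by relevance alone would place the exact duplicate (feature 1) above the noisy feature 2, even though it adds no information once feature 0 is chosen. The redundancy term instead selects features 0 and 2, whose joint Bayes error is 0.125, versus 0.5 for the redundant pair.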

Selected Publications:

Demos/Results:

Contact: Manuela Vasconcelos, Nuno Vasconcelos


© SVCL