
| Person-following UAVs | ||

|

We consider the design of vision-based control algorithms for unmanned aerial vehicles (UAVs), so as to enable a UAV to autonomously follow a person. A new vision-based control architecture is proposed with the goals of 1) robustly following the user and 2) implementing following behaviors programmed by manipulation of visual patterns. This is achieved within a detection/tracking paradigm, where the target is a programmable badge worn by the user. This badge contains a visual pattern with two components. The first is fixed and used to locate the user. The second is variable and implements a code used to program the UAV behavior. A biologically inspired tracking/recognition architecture, combining bottom-up and top-down saliency mechanisms, a novel image similarity measure, and an affine validation procedure, is proposed to detect the badge in the scene. The badge location is used by a control algorithm to adjust the UAV flight parameters so as to maintain the user in the center of the field of view. The detected badge is further analyzed to extract the visual code that commands the UAV behavior. This is used to control the height and distance of the UAV relative to the user. |
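The code-extraction step can be illustrated with a minimal sketch. It assumes the variable part of the badge has already been split into blocks whose average intensities are known, that a bright block encodes a 1 and a dark block a 0, and that bits are read most significant first. The function name `decode_program_bits`, the threshold value, and the bit order are hypothetical and are not taken from the paper.

```python
def decode_program_bits(block_means, threshold):
    """Turn average block intensities into an integer code.

    Assumptions (not from the paper): a block brighter than `threshold`
    is a 1, a darker block is a 0, and the first block is the most
    significant bit.
    """
    value = 0
    for mean in block_means:
        bit = 1 if mean > threshold else 0
        value = (value << 1) | bit  # shift earlier bits left, append the new one
    return value


# Eight hypothetical block intensities on a 0-255 scale.
means = [220, 30, 210, 200, 40, 35, 190, 25]
code = decode_program_bits(means, threshold=128)
print(code)          # bits 10110010
print(bin(code))
```

The resulting integer could then be looked up in a table of behaviors, such as a target height or following distance. Any such table is a design choice outside the scope of this sketch.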

||

|

||

|

This figure illustrates the vision-based UAV control architecture. The user wears a badge, which is detected and localized in real time. The differences between the badge position/size and pre-specified target values act as error signals for a control algorithm, which issues drone flight commands so as to align the badge position and size with the target values. The badge displays two patterns: a control pattern (control bits) and a behavior program pattern (program bits). The former is used for badge detection, the latter (binary code for 48, 573 in this example) to specify drone behaviors. |
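The error-signal idea in this caption can be sketched as a simple proportional controller. This is only an illustration under stated assumptions: the page does not specify the control law, so the proportional form, the gains, the normalized image coordinates, and the names `BadgeObservation`, `FlightCommand`, and `follow_step` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BadgeObservation:
    cx: float    # badge center, horizontal, as a fraction of image width
    cy: float    # badge center, vertical, as a fraction of image height
    size: float  # badge width as a fraction of image width


@dataclass
class FlightCommand:
    yaw: float       # positive turns right
    vertical: float  # positive climbs
    forward: float   # positive moves toward the user


def clamp(value, low=-1.0, high=1.0):
    """Keep a command inside the assumed normalized range."""
    return max(low, min(high, value))


def follow_step(observed, target, gains=(1.0, 1.0, 2.0)):
    """Map badge position/size errors to flight commands (proportional law)."""
    k_yaw, k_vertical, k_forward = gains
    err_x = observed.cx - target.cx        # > 0: badge is right of the target
    err_y = observed.cy - target.cy        # > 0: badge is below the target (y grows downward)
    err_size = target.size - observed.size  # > 0: badge looks too small, user is too far
    return FlightCommand(
        yaw=clamp(k_yaw * err_x),
        vertical=clamp(-k_vertical * err_y),
        forward=clamp(k_forward * err_size),
    )


target = BadgeObservation(cx=0.5, cy=0.5, size=0.2)
observed = BadgeObservation(cx=0.8, cy=0.5, size=0.1)
print(follow_step(observed, target))  # turns right and moves forward
```

In this sketch, changing `target.size` changes the following distance, which is one way a decoded behavior program could alter the UAV behavior.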

||

|

||

|

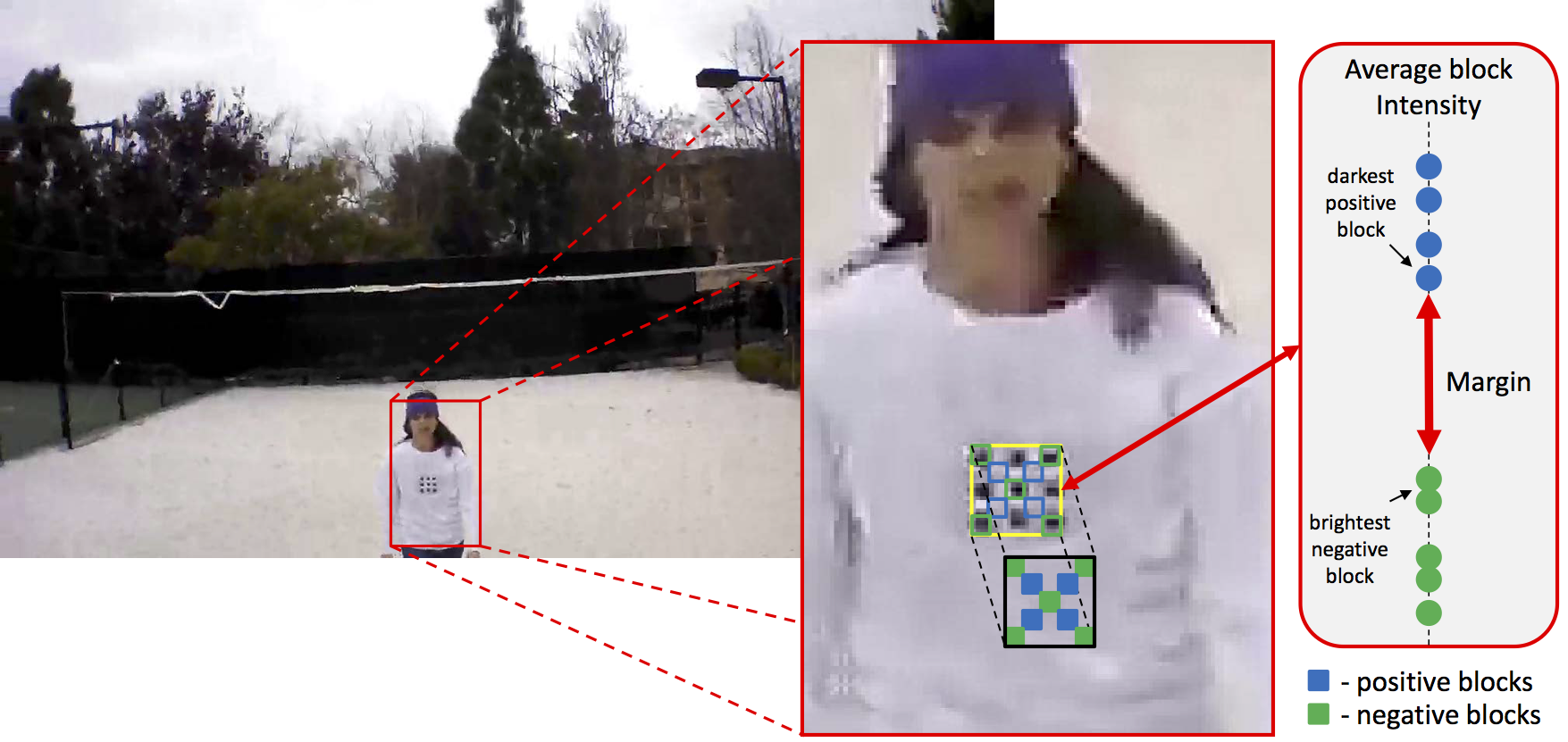

In this work, we developed a novel margin-based similarity measure. The average intensity of each badge square is computed. Blocks corresponding to white squares are denoted positive, and blocks corresponding to black squares are denoted negative. The margin is the difference between the average intensity of the darkest positive block and that of the brightest negative block. Because the margin compares intensities within the candidate region rather than against a fixed brightness level, the algorithm is robust to changes in lighting and produces fewer false detections. |
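The margin described above can be computed in a few lines. The sketch below follows the definition given in the caption and assumes the per-block average intensities are already available. The function name `margin` and the example values are hypothetical.

```python
def margin(block_means, expected_white):
    """Margin between the darkest positive and the brightest negative block.

    block_means    : average intensity of each candidate block
    expected_white : True where the badge pattern has a white square
                     (positive block), False where it has a black one
    """
    positives = [m for m, white in zip(block_means, expected_white) if white]
    negatives = [m for m, white in zip(block_means, expected_white) if not white]
    if not positives or not negatives:
        raise ValueError("pattern needs at least one white and one black square")
    return min(positives) - max(negatives)


pattern = [True, False, True, False]

# A badge under bright and dim lighting: the margin stays positive.
print(margin([200, 40, 210, 35], pattern))
print(margin([100, 20, 105, 18], pattern))

# Background clutter that does not match the pattern: the margin is negative.
print(margin([120, 130, 90, 110], pattern))
```

A large positive margin means every expected-white block is clearly brighter than every expected-black block, so a candidate region can be accepted or rejected by comparing the margin to a threshold. The choice of threshold is left open here.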

||

|

||

|

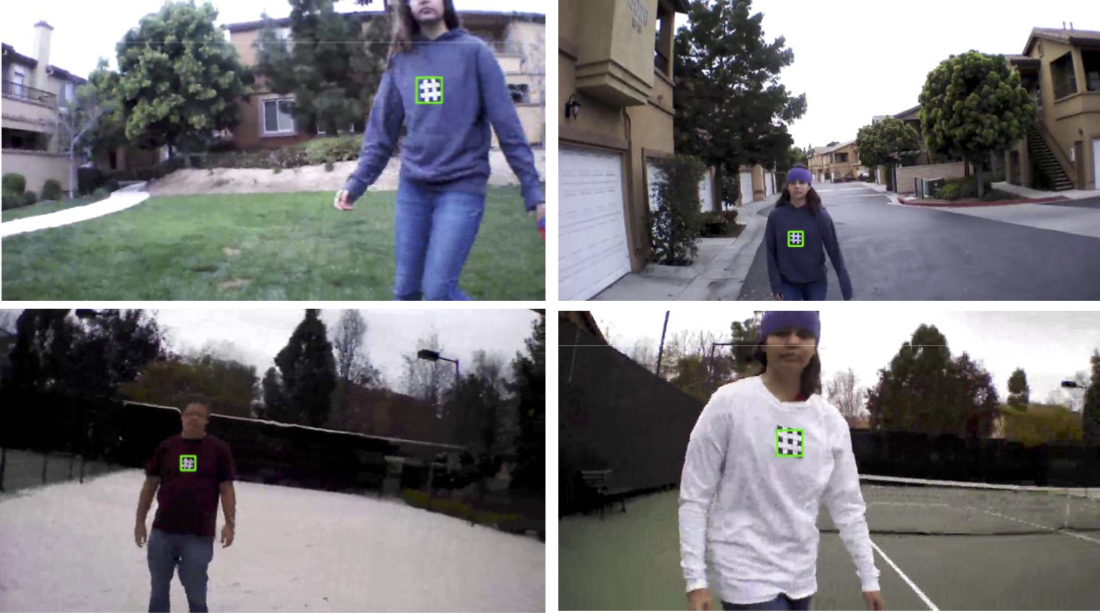

These are example detections (green bounding boxes) from the badge detection dataset. Note the diversity of subject orientations, distances to the camera, and background clutter. The image dataset is available at the links below. |

||

|

||

|

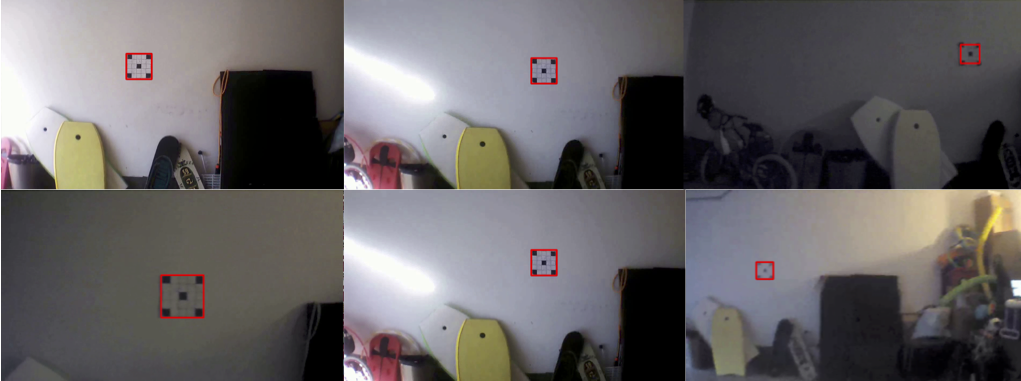

To test the tracking robustness of the badge detection algorithm, videos were recorded at different badge-drone distances and under different scene illuminations. Top: images collected at the same distance under different illuminations. Bottom: images collected at multiple distances. These video datasets are available at the links below. |

||

|

||

|

Experiments were conducted to evaluate the overall performance of the UAV control algorithm. Top: the UAV follows a user as she moves in 3D space. Bottom: the user successively displays two badges that command the UAV to move closer or farther away. In all images, a green ellipse indicates the position of the drone and a yellow ellipse the position of the badge. Videos of these experiments are available at the links below. |

||

| VIDEOS | ||

| DATASETS |

Image Badge Detection Dataset: Download; Video Badge Tracking Dataset: Download |

|

| PUBLICATION |

Person-following UAVs. F. Vasconcelos and N. Vasconcelos. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, New York, USA, Mar. 2016. © IEEE [PDF] |

|

© SVCL