
This page presents a collection of results obtained with the discriminant saliency model. We briefly review the model and then show how it performs on texture and object databases.

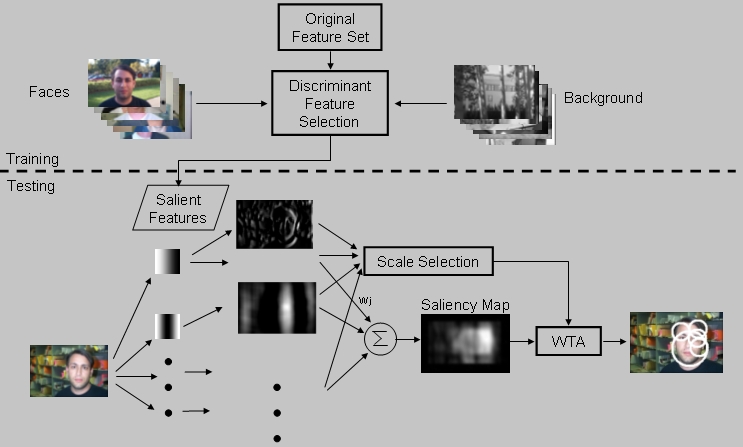

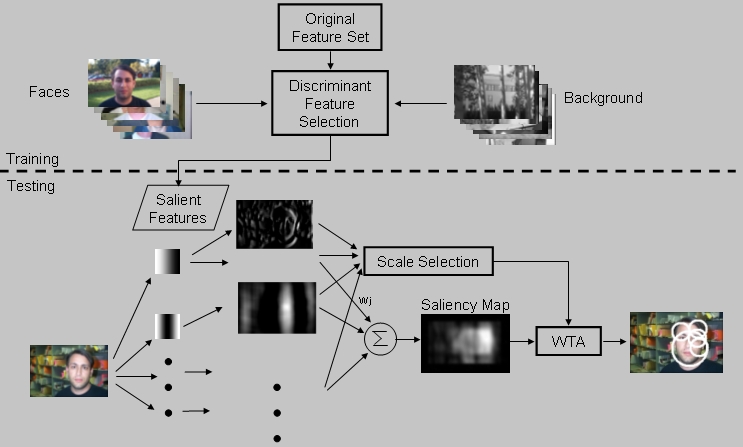

Discriminant Saliency Model

Discriminant saliency is defined with respect to a recognition problem: the salient features of a given visual class are the features that most distinguish it from all other visual classes. As shown in the following figure, the saliency detection model consists of two steps. The first is the selection of discriminant features. The second consists of a biologically inspired model for 1) translating the image responses of those features into a saliency map (which assigns a degree of saliency to each image pixel) and 2) using that map to determine the most salient image locations.
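The two steps can be illustrated with a minimal sketch. The page does not state the selection criterion or how feature responses are combined, so everything below is an assumption made for illustration: features are ranked by a histogram estimate of the mutual information between response and class label, the saliency map is a weighted sum of half-wave rectified responses, and salient locations are picked greedily while suppressing a neighborhood around each pick. All function names are hypothetical.

```python
import numpy as np

def mutual_information(responses, labels, bins=8):
    """Histogram estimate (in bits) of I(X; Y) for a 1-D response X and 0/1 labels Y."""
    edges = np.histogram_bin_edges(responses, bins=bins)
    x = np.clip(np.digitize(responses, edges[1:-1]), 0, bins - 1)
    joint = np.zeros((bins, 2))
    np.add.at(joint, (x, labels), 1.0)          # joint histogram of (bin, class)
    joint /= joint.sum()
    px = joint.sum(axis=1, keepdims=True)       # marginal over response bins
    py = joint.sum(axis=0, keepdims=True)       # marginal over classes
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log2(joint[nz] / (px @ py)[nz])))

def select_features(responses, labels, k):
    """Step 1 (assumed criterion): keep the k features with the highest MI score.

    responses has shape (n_samples, n_features); labels holds integers 0 or 1.
    """
    scores = np.array([mutual_information(responses[:, j], labels)
                       for j in range(responses.shape[1])])
    order = np.argsort(scores)[::-1][:k]
    return order, scores

def saliency_map(feature_maps, weights):
    """Step 2a (assumed form): weighted sum of half-wave rectified feature maps.

    feature_maps has shape (n_features, H, W); weights has shape (n_features,).
    """
    rectified = np.maximum(feature_maps, 0.0)
    return np.tensordot(weights, rectified, axes=1)

def top_salient_locations(smap, n, radius):
    """Step 2b (assumed form): greedy peak picking with neighborhood suppression."""
    s = smap.astype(float).copy()
    locations = []
    for _ in range(n):
        r, c = np.unravel_index(np.argmax(s), s.shape)
        if not np.isfinite(s[r, c]):
            break                                # every pixel is already suppressed
        locations.append((int(r), int(c)))
        s[max(0, r - radius):r + radius + 1,
          max(0, c - radius):c + radius + 1] = -np.inf
    return locations
```

In this sketch, the weights passed to `saliency_map` could be the MI scores of the selected features, so that more discriminant features contribute more to the map; that choice is also an assumption.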

Examples from the Brodatz texture database

Examples of saliency maps for various textures are shown here. These examples show that discriminant saliency can 1) ignore highly textured backgrounds in favor of more salient foreground objects, and 2) detect as salient a wide variety of shapes and contours of different crispness and scale, or even texture gradients.

Examples from the

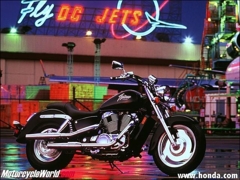

Caltech object database

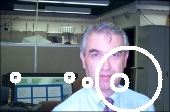

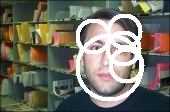

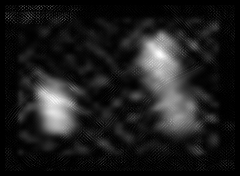

The following are saliency maps and top salient locations detected by discriminant saliency on various images from the Caltech object database. For comparison, we also present the salient locations detected by other methods that are popular in the saliency literature (Multiscale Harris and Scale Saliency).
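For readers unfamiliar with the Harris baseline, the following is a rough sketch of a multiscale Harris corner response. The page does not say which variant or parameters were used in the comparison, so the scales, the ratio between derivative and integration scale, the constant `k`, and the rule of taking the maximum response over scales are all assumptions, and the function names are hypothetical.

```python
import numpy as np

def smooth(img, sigma):
    """Separable Gaussian smoothing with edge padding (output has the input shape)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def harris_response(img, sigma_d, k=0.05):
    """Harris response det(M) - k * trace(M)^2 at derivative scale sigma_d."""
    gy, gx = np.gradient(smooth(img.astype(float), sigma_d))
    sigma_i = 1.4 * sigma_d            # integration scale (assumed ratio)
    norm = sigma_d ** 2                # scale normalization of the derivatives
    sxx = norm * smooth(gx * gx, sigma_i)
    syy = norm * smooth(gy * gy, sigma_i)
    sxy = norm * smooth(gx * gy, sigma_i)
    return sxx * syy - sxy**2 - k * (sxx + syy) ** 2

def multiscale_harris(img, sigmas=(1.0, 2.0)):
    """Pixelwise maximum of the Harris response over the given scales (assumed rule)."""
    return np.max([harris_response(img, s) for s in sigmas], axis=0)
```

The response is large and positive where the image gradient varies in two directions (corners), negative along straight edges, and near zero in flat regions. This is why such detectors tend to fire on textured backgrounds as readily as on the object of interest.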

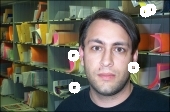

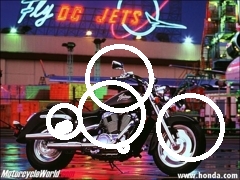

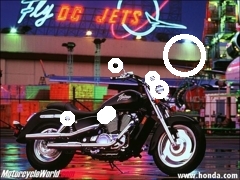

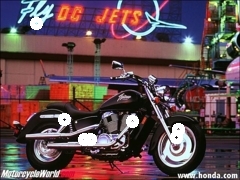

| Original Image | Saliency Maps by Discriminant Saliency | Salient Locations by Discriminant Saliency | Salient Locations by Scale Saliency | Salient Locations by Multiscale Harris |

Robustness on the Caltech object database

Here are more saliency detection results on the Caltech object database. These are intended to give an idea of the robustness of discriminant saliency.

Robustness to 3-D Rotation

The following are examples from the Columbia Object Image Library (COIL), which is a good dataset for testing the robustness of salient locations to 3-D rotation. It can be seen that discriminant saliency declares as salient neighborhoods whose appearance is consistent among different views of an object. In these examples, the rotation between adjacent images is 5 degrees, and the top 10 salient locations are identified.
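One simple way to put a number on this kind of consistency is to count how many salient locations in one view have a counterpart nearby in the adjacent view. The page does not define such a metric, so the sketch below is purely illustrative: it assumes that a 5-degree rotation moves image points by only a few pixels, so locations can be compared directly in image coordinates, and the function name and matching radius are hypothetical.

```python
import numpy as np

def match_fraction(locs_a, locs_b, radius):
    """Fraction of locations in view A with a location in view B within `radius` pixels."""
    a = np.asarray(locs_a, dtype=float)
    b = np.asarray(locs_b, dtype=float)
    if len(a) == 0 or len(b) == 0:
        return 0.0
    # Pairwise Euclidean distances between every location in A and every location in B.
    dists = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    return float(np.mean(dists.min(axis=1) <= radius))

# Hypothetical salient locations (row, col) in two adjacent views.
view_a = [(10, 10), (20, 20), (30, 5)]
view_b = [(11, 10), (20, 22), (50, 50)]
print(match_fraction(view_a, view_b, radius=3))
```

A value close to 1 across consecutive views would indicate that the detector keeps returning to the same neighborhoods of the object as it rotates.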
