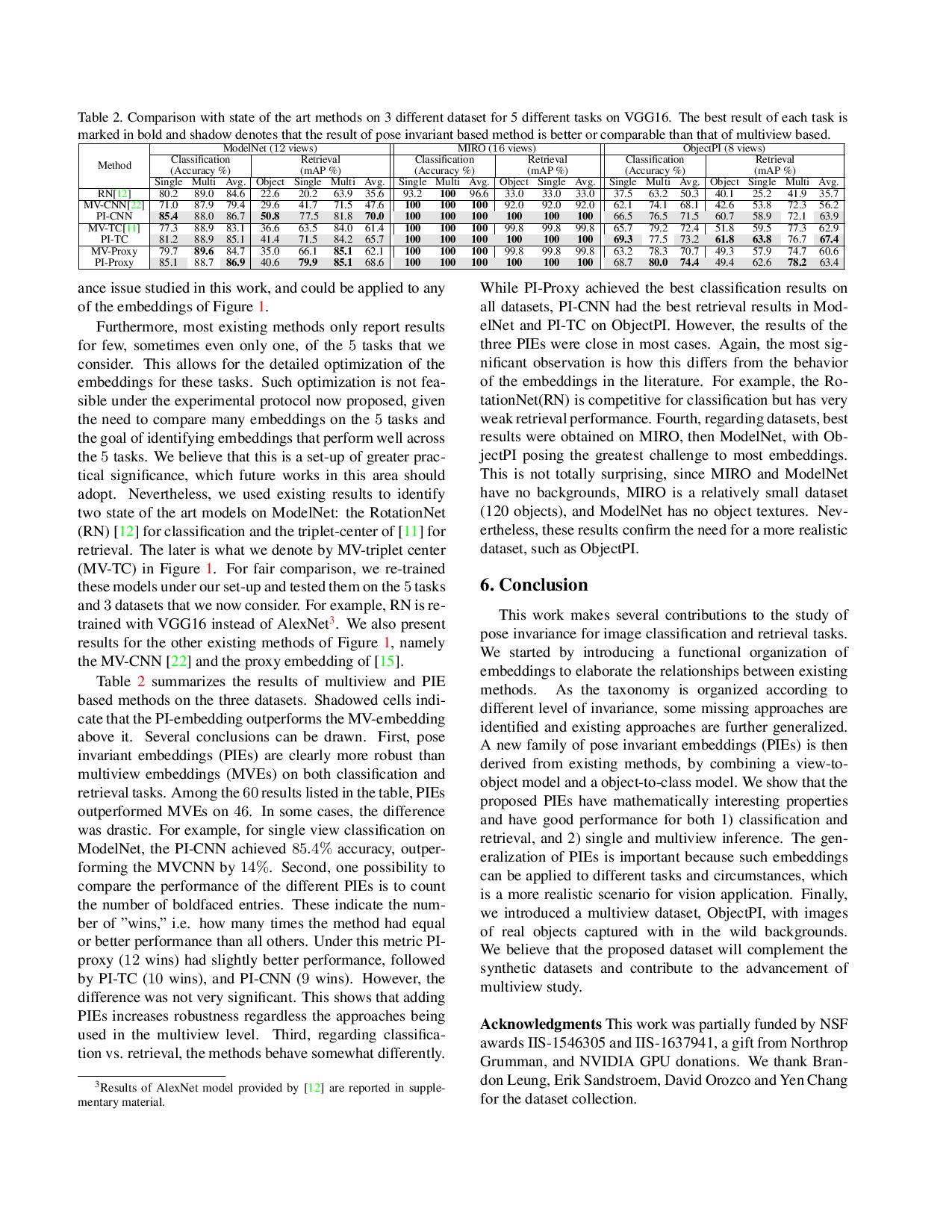

Abstract

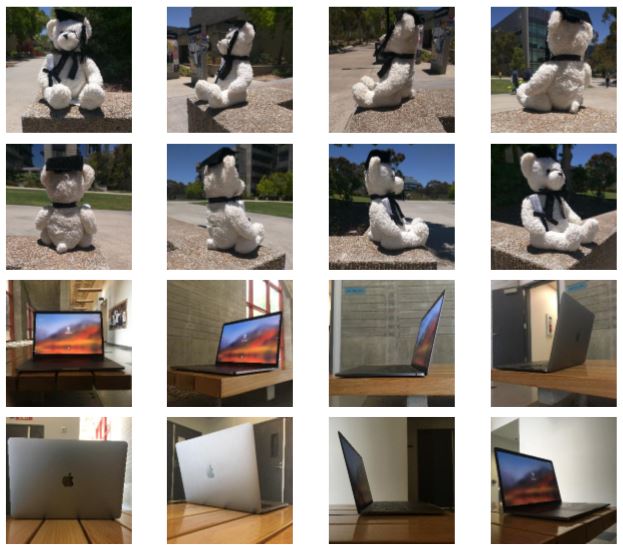

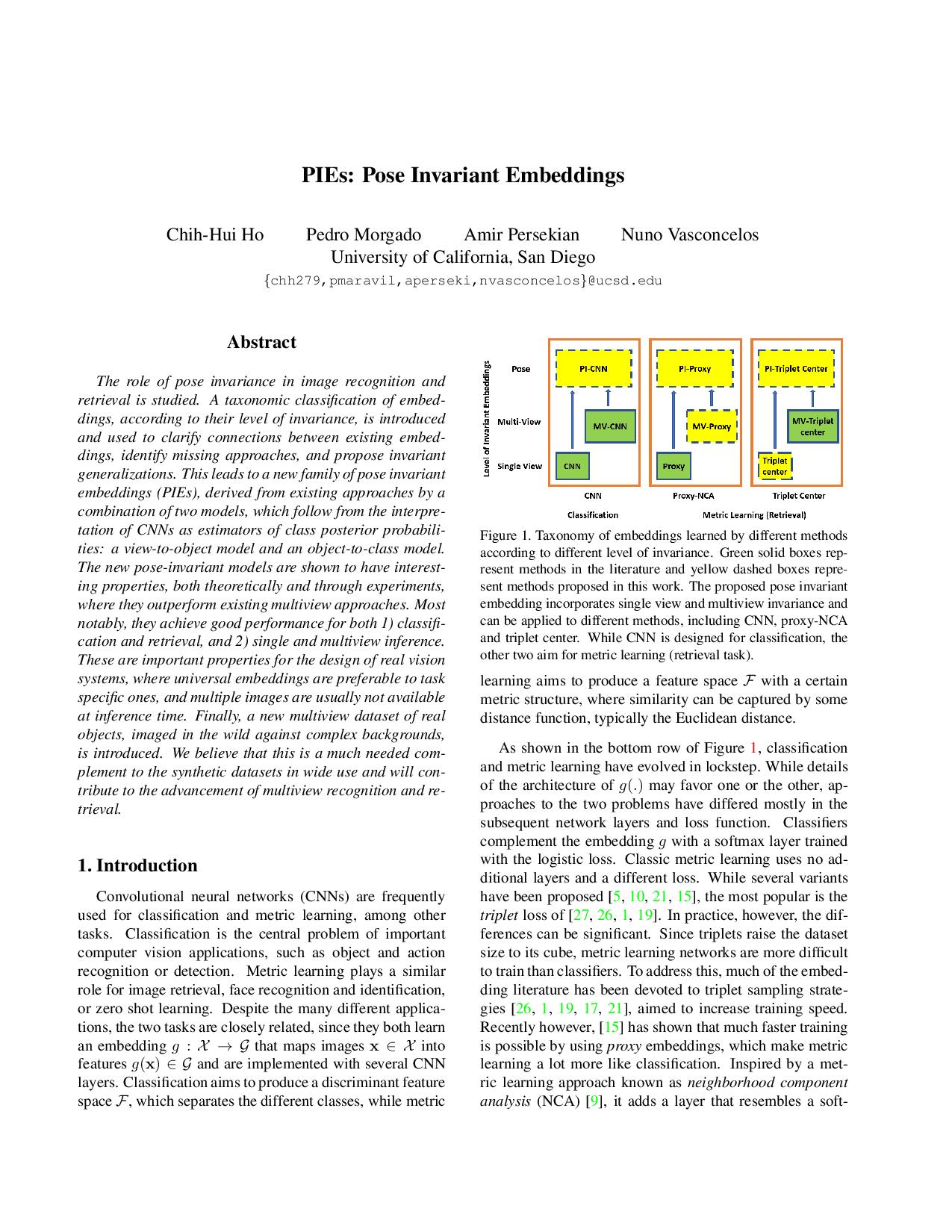

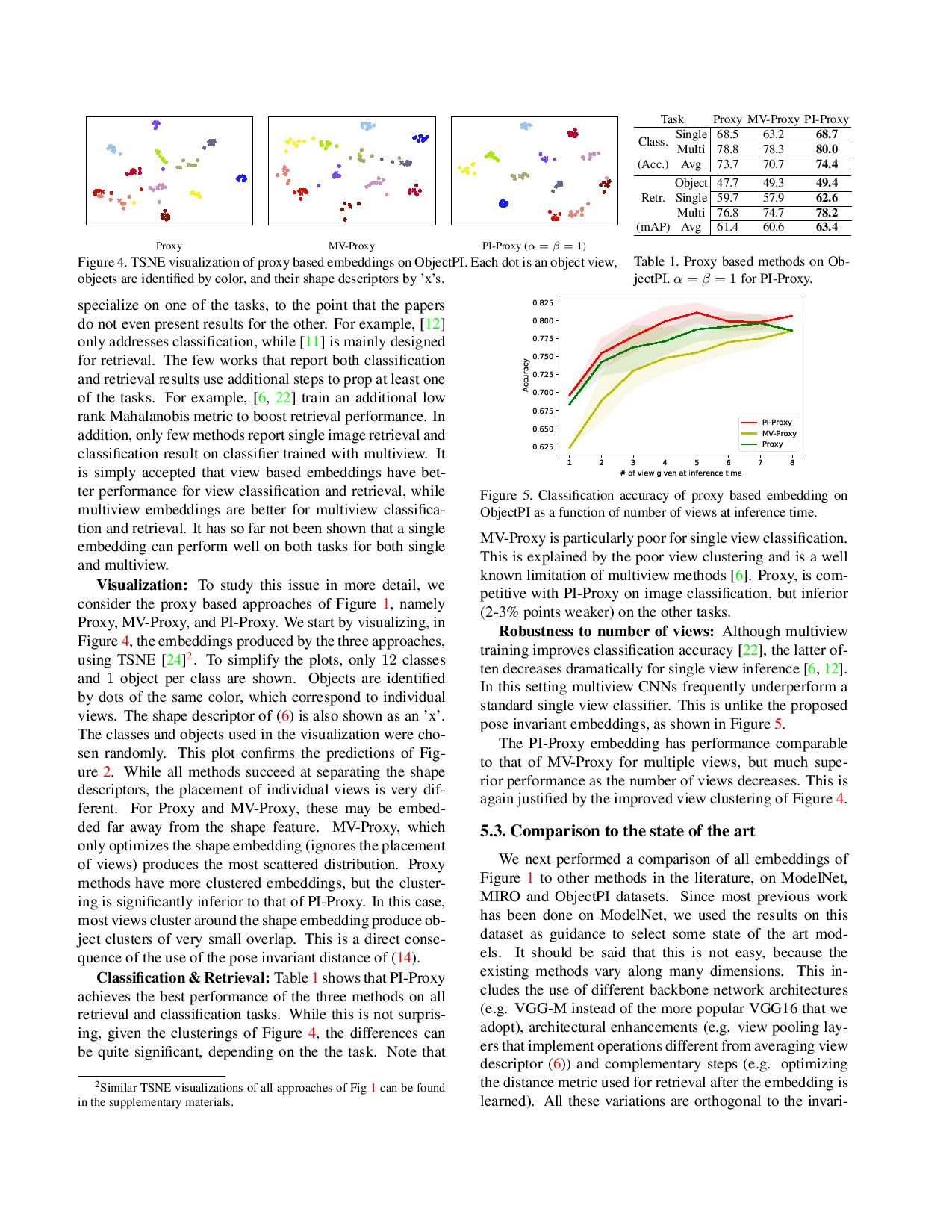

The role of pose invariance in image recognition and retrieval is studied. A taxonomic classification of embeddings, according to their level of invariance, is introduced and used to clarify connections between existing embeddings, identify missing approaches, and propose invariant generalizations. This leads to a new family of pose-invariant embeddings (PIEs), derived from existing approaches by combining two models that follow from the interpretation of CNNs as estimators of class posterior probabilities: a view-to-object model and an object-to-class model. The new pose-invariant models are shown to have interesting properties, both theoretically and through experiments, where they outperform existing multiview approaches. Most notably, they achieve good performance for both (1) classification and retrieval and (2) single-view and multiview inference. These are important properties for the design of real vision systems, where universal embeddings are preferable to task-specific ones and multiple images are usually not available at inference time. Finally, a new multiview dataset of real objects, imaged in the wild against complex backgrounds, is introduced. We believe that this is a much-needed complement to the synthetic datasets in wide use and will contribute to the advancement of multiview recognition and retrieval.
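To make the two-level idea in the abstract concrete, the following is a minimal sketch and not the method from the paper. It assumes that per-view embeddings are already available as plain vectors, and it uses simple averaging as a stand-in for both the view-to-object and object-to-class steps. All names (`mean_embedding`, `classify`, the toy objects and their coordinates) are hypothetical and chosen only for illustration.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)

def mean_embedding(vectors):
    """Average a list of equal-length vectors and renormalize the result."""
    n, dim = len(vectors), len(vectors[0])
    return l2_normalize([sum(v[i] for v in vectors) / n for i in range(dim)])

def cosine(a, b):
    """Cosine similarity of two unit-length vectors (a plain dot product)."""
    return sum(x * y for x, y in zip(a, b))

def classify(views, class_centroids):
    """Pool one or more view embeddings, then pick the most similar class."""
    query = mean_embedding([l2_normalize(v) for v in views])
    return max(class_centroids, key=lambda c: cosine(query, class_centroids[c]))

# Toy data (assumed): object name -> (class label, per-view embeddings).
objects = {
    "mug_1": ("mug", [[1.0, 0.1], [0.9, 0.2]]),
    "mug_2": ("mug", [[0.8, 0.0], [1.0, 0.3]]),
    "shoe_1": ("shoe", [[0.1, 1.0], [0.2, 0.9]]),
}

# View-to-object step (assumed form): pool the views of each object.
object_embeddings = {
    name: mean_embedding([l2_normalize(v) for v in views])
    for name, (_, views) in objects.items()
}

# Object-to-class step (assumed form): pool the objects of each class.
by_class = {}
for name, (label, _) in objects.items():
    by_class.setdefault(label, []).append(object_embeddings[name])
class_centroids = {label: mean_embedding(embs) for label, embs in by_class.items()}

# The same function handles single-view and multiview queries.
single_view_prediction = classify([[0.95, 0.15]], class_centroids)
multi_view_prediction = classify([[0.3, 0.8], [0.1, 0.7]], class_centroids)
```

Because queries and centroids live in the same space, the same `cosine` score could also rank stored object embeddings for retrieval. This illustrates why a single embedding serving both tasks is convenient, under the assumptions stated above.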

OOWL "In the Wild"

CVPR 2019

PIEs: Pose Invariant Embeddings

Chih-Hui Ho, Pedro Morgado, Amir Persekian, Nuno Vasconcelos

Paper

Supplementary material

Poster

Code

@InProceedings{Ho_2019_CVPR,
  author = {Ho, Chih-Hui and Morgado, Pedro and Persekian, Amir and Vasconcelos, Nuno},
  title = {PIEs: Pose Invariant Embeddings},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2019}
}

Acknowledgements

This work was partially funded by NSF awards IIS-1546305 and IIS-1637941, a gift from Northrop Grumman, and NVIDIA GPU donations.
